I'm having trouble proving the following theorems:

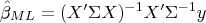

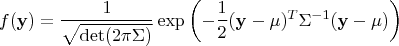

1) In a linear regression model with DEPENDENT normally distributed errors (with covariance matrix $\Sigma$), the maximum likelihood estimate of $\beta$ gives

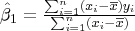

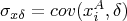

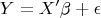

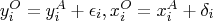

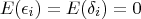

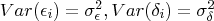

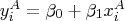

2) Let  be estimated by the least squares method, and let  ,  ,  .

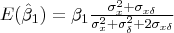

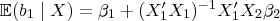

. Show that

My progress: in the second one, simply plugging everything I know into the result and hoping it would give the expectation of the  did not work. For the first one I don't know what to try, because I'm not even sure what the likelihood function looks like when the errors are dependent.
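For the first problem, here is a sketch of the likelihood under the assumption that the errors are $\varepsilon \sim N(0, \Sigma)$ with $\Sigma$ known and invertible (the symbol $\Sigma$ is my assumption, since the original formulas did not survive). The log-likelihood is

$$\ell(\beta) = -\tfrac{n}{2}\log(2\pi) - \tfrac{1}{2}\log\det\Sigma - \tfrac{1}{2}(y - X\beta)^\top \Sigma^{-1}(y - X\beta),$$

and setting $\partial \ell / \partial \beta = X^\top \Sigma^{-1}(y - X\beta) = 0$ gives $\hat\beta = (X^\top \Sigma^{-1} X)^{-1} X^\top \Sigma^{-1} y$, i.e. generalized least squares. The NumPy snippet below checks this numerically on simulated data; the function names and the AR(1)-style covariance are hypothetical choices for illustration only.

```python
import numpy as np

def log_likelihood(beta, X, y, Sigma):
    """Gaussian log-likelihood of y = X @ beta + eps, eps ~ N(0, Sigma)."""
    n = len(y)
    r = y - X @ beta
    _, logdet = np.linalg.slogdet(Sigma)
    return -0.5 * (n * np.log(2 * np.pi) + logdet + r @ np.linalg.solve(Sigma, r))

def gls_estimate(X, y, Sigma):
    """Closed-form maximizer (X' Sigma^-1 X)^-1 X' Sigma^-1 y."""
    Si_X = np.linalg.solve(Sigma, X)  # Sigma^-1 X
    Si_y = np.linalg.solve(Sigma, y)  # Sigma^-1 y
    return np.linalg.solve(X.T @ Si_X, X.T @ Si_y)

def whitened_ols(X, y, Sigma):
    """Same estimate via plain OLS after whitening with L, where Sigma = L L'."""
    L = np.linalg.cholesky(Sigma)
    Xw = np.linalg.solve(L, X)
    yw = np.linalg.solve(L, y)
    return np.linalg.lstsq(Xw, yw, rcond=None)[0]

# Hypothetical data: n = 50 observations, covariance Sigma_ij = rho^|i-j|.
rng = np.random.default_rng(0)
n, rho = 50, 0.7
idx = np.arange(n)
Sigma = rho ** np.abs(idx[:, None] - idx[None, :])
X = np.column_stack([np.ones(n), rng.normal(size=n)])
beta_true = np.array([1.0, 2.0])
eps = np.linalg.cholesky(Sigma) @ rng.normal(size=n)  # eps ~ N(0, Sigma)
y = X @ beta_true + eps

beta_hat = gls_estimate(X, y, Sigma)
score = X.T @ np.linalg.solve(Sigma, y - X @ beta_hat)  # gradient of the log-likelihood at beta_hat
print(beta_hat, np.abs(score).max())
```

The whitening function is there to show why the result makes sense: multiplying the model by $L^{-1}$ turns the dependent errors into independent ones with identity covariance, after which ordinary least squares applies.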

and  ? What is  ?

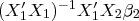

where $Z$ contains all the other independent variables that we ignore, and write the true model as $y = X\beta + Z\gamma + \varepsilon$. Expand out $y$ in the formula for ordinary least squares, $\hat\beta = (X^\top X)^{-1}X^\top y$, and take the (conditional) expectation (so the $\varepsilon$ disappears). The result is the so-called omitted variable (bias) formula

$$\mathbb{E}[\hat\beta \mid X, Z] = \beta + (X^\top X)^{-1}X^\top Z\gamma.$$

The term $(X^\top X)^{-1}X^\top Z\gamma$ is precisely the weighted proportion of the omitted variables $Z$ that we included.
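A quick numerical check of the omitted variable formula, as a sketch: hold $X$ and $Z$ fixed, redraw only $\varepsilon$, and compare the Monte Carlo average of the OLS estimate from regressing $y$ on $X$ alone with $\beta + (X^\top X)^{-1}X^\top Z\gamma$. The sample size, coefficients, and the way $Z$ is made to correlate with $X$ are all hypothetical choices, and the helper names are illustrative.

```python
import numpy as np

def ols(X, y):
    """Ordinary least squares (X'X)^-1 X'y, computed with lstsq."""
    return np.linalg.lstsq(X, y, rcond=None)[0]

def omitted_variable_formula(X, Z, beta, gamma):
    """E[beta_hat | X, Z] = beta + (X'X)^-1 X'Z gamma for the short regression on X."""
    return beta + np.linalg.solve(X.T @ X, X.T @ Z @ gamma)

rng = np.random.default_rng(1)
n = 200
X = np.column_stack([np.ones(n), rng.normal(size=n)])
# Hypothetical omitted regressor, correlated with the included slope variable.
Z = (0.5 * X[:, 1] + rng.normal(size=n)).reshape(-1, 1)
beta = np.array([1.0, 2.0])
gamma = np.array([3.0])

# Redraw only the error term, keeping X and Z fixed (conditional expectation).
reps = 5000
estimates = np.empty((reps, 2))
for r in range(reps):
    y = X @ beta + Z @ gamma + rng.normal(size=n)
    estimates[r] = ols(X, y)  # regress y on X only, ignoring Z

print("Monte Carlo mean:", estimates.mean(axis=0))
print("formula:         ", omitted_variable_formula(X, Z, beta, gamma))
```

With $\varepsilon$ set to zero the identity holds exactly, because $\hat\beta$ is linear in $y$; the simulation only shows that averaging over $\varepsilon$ removes the noise term.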

so it will be simpler, and then differentiate and solve the first-order conditions for the maximum, right?

 be the observed values for  and let  be the close actual values; "close" means  , where  and  . We want to model  , but we observed values  ,  ,  and  , so plugging into the omitted variable formula gives  .
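The last step can be illustrated under an assumed classical measurement-error setup. The original formulas did not survive, so this is a hypothetical instance and not necessarily the exact problem: the true model is $y = \beta x^* + \varepsilon$, we observe $x = x^* + u$, and $u$, $x^*$, $\varepsilon$ are independent with mean zero. Then $y = \beta x - \beta u + \varepsilon$, so the omitted variable is $u$ with coefficient $-\beta$, and the formula gives $\mathbb{E}[\hat\beta \mid x, u] = \beta - \beta (x^\top x)^{-1} x^\top u$. For large $n$, $x^\top u / n \to \sigma_u^2$ and $x^\top x / n \to \sigma_*^2 + \sigma_u^2$, so $\hat\beta$ is pulled toward $\beta\,\sigma_*^2 / (\sigma_*^2 + \sigma_u^2)$ (attenuation). All parameter values below are made up.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 200_000
beta, sigma_star, sigma_u, sigma_eps = 2.0, 1.0, 1.0, 0.5  # hypothetical values

x_star = rng.normal(scale=sigma_star, size=n)  # actual (unobserved) regressor
u = rng.normal(scale=sigma_u, size=n)          # measurement error
eps = rng.normal(scale=sigma_eps, size=n)      # regression error
x = x_star + u                                 # observed regressor
y = beta * x_star + eps                        # true model

# OLS slope of y on the observed x (no intercept, since everything has mean zero).
beta_hat = (x @ y) / (x @ x)

# Omitted variable formula with Z = u and gamma = -beta, plus the eps term
# that the conditional expectation removes; together they reproduce beta_hat exactly.
ovb_part = beta - beta * (x @ u) / (x @ x)
noise_part = (x @ eps) / (x @ x)

attenuated = beta * sigma_star**2 / (sigma_star**2 + sigma_u**2)
print(beta_hat, ovb_part, attenuated)
```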